New website

A new look, but also an old approach

We have a new website!

Or should I say "websites"? As well as a fresh new look to this site, we've also created our own video site to host our project videos, plus a bunch of other project websites and internal collaboration tools. All of which are now self-hosted: they run on our own computer hardware, on our own fibre connection, at one of our own locations (well… my house), and are completely managed by me.

There are a few motivating reasons for all of this:

- the old website was getting pretty tired;

- we can save money on servers and subscriptions;

- we've disentangled from some large companies with questionable ethics;

- and because I used to do this.

This last point deserves some more digging into (though you can skip to the bottom if you're just here for the technical skinny).

Prologue

My first proper job as a teenager (not counting dishwashing at a hotel) was as a cable-monkey at a local university. I spent a summer crawling through roof spaces dragging 10BASE2 Ethernet cables behind me (if you don't know what these are, be happy). This was part of a huge project to wire up the whole campus and install new-fangled "personal computers" into all of the offices. This would bring exciting new tools to the staff, like "word processing" and "electronic mail".

It turned out I was quite good at this computer malarkey and so I was promoted the following summer into the systems team that ran the central computers. One of my first projects there was helping out with connecting the university to a new, mostly US network called the "Internet". Around this time, some guy at CERN had come up with a system called the "World Wide Web" that was the hot new thing.

These were heady days. Back then, every institution connected to the Internet had their own servers. There was no central authority, no real plan or design, and yet it all somehow worked. I was fascinated by this hodge-podge of about 100 thousand computers communicating with each other. The key was protocols: all of the computers agreed to talk to each other in the same way. You could compose an email using a program on one computer, push a button and have it hop from server to server across the Internet, passing through a mix of delivery systems, until it popped out the other end and could be read with a completely different program.

The magic of email is right there in the address: the bit after the @ says where in the world to route the message, and the bit before says who to deliver it to when it gets there. Only the final server needs to know who you are; the ones in the middle just need to be able to get the email a bit closer to where you are. This distributed, cooperative approach was baked into the Internet.

Enter the main characters, stage left

The early Internet was mainly governments, universities and some big companies. Then the early-2000s Internet gold-rush happened and suddenly everyone was there. Initially this explosion followed a similar pattern to the early Internet: millions of different servers connected in a big, shonky network; providers constantly appeared and failed, causing confusion and mayhem.

Humans are messy, shambolic creatures, but we crave convenience. It was probably inevitable that in this chaos the main characters would emerge. Rather than having your email provided by the company that supplies your Internet connection, you could just use Hotmail. Rather than creating your own web pages, you could just use MySpace – all your friends and a load of interesting people were there already. There was this new "Face Book" – everyone from school was there too…

Fast forward a couple of decades and you get to where we are now: a handful of gigantic companies control the bulk of the Internet. Gmail is now so vast that it is able to single-handedly dictate the rules of email for everyone else. Every other website is actually Squarespace and we've let Amazon own all of the computers and rent them back to us by the second. Facebook has literally billions of users and has extended its reach to become a sort of planet-straddling surveillance state that monitors most traffic on the Internet. Google has done likewise with search and ads, building an incredibly detailed picture of the habits and tastes of almost every human on the planet. I'd like to say that I've been fighting against this tide, but I've been using the big sites for years too.

Act III

Then Elon Musk bought Twitter.

I liked Twitter: lots of weird and funny people were there – many of them my friends – but I could see the bar clientele changing. I'd already become disenchanted with Facebook and was using Instagram less and less. But I still wanted to do social media. A few people I knew were talking about Mastodon and, amazingly, I realised that I already had an account that I'd created years previously. So I put a new profile picture and bio up and started to post.

Mastodon is part of a hot new thing called the "Fediverse". It's a loose collection of different social media sites that use a common protocol: you can join one site using one piece of software, and follow and communicate with people on a completely different site using different software. You have an address, and it says both who you are and where you are.

Sound familiar?
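For the curious, the "who and where" lookup can be sketched in a few lines of Python. This is only an illustration, not how any particular server does it: it assumes the handle has the usual @user@domain shape and builds the WebFinger (RFC 7033) URL that servers such as Mastodon answer when asked about an account. The function names and the example handle are made up for this sketch.

```python
from urllib.parse import urlencode


def split_handle(handle: str) -> tuple[str, str]:
    """Split '@user@domain' (or 'user@domain') into (user, domain)."""
    user, sep, domain = handle.lstrip("@").rpartition("@")
    if not sep or not user or not domain:
        raise ValueError(f"not a valid handle: {handle!r}")
    # Domain names are case-insensitive, so normalise to lower case.
    return user, domain.lower()


def webfinger_url(handle: str) -> str:
    """Build the WebFinger lookup URL for a handle (nothing is fetched)."""
    user, domain = split_handle(handle)
    query = urlencode({"resource": f"acct:{user}@{domain}"})
    return f"https://{domain}/.well-known/webfinger?{query}"


# Hypothetical handle, for illustration only.
print(webfinger_url("@alice@example.social"))
```

Just like email, the bit after the last @ says which server to ask, and only that server needs to know anything about the person.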

The Fediverse has tens of thousands of sites that you can choose from. The software varies from a range of Twitter-like microblogging programs to specialist picture and video sharing programs, and Reddit-like forums. There are dozens of different, mostly-compatible phone apps you can use. The key common feature, though, is that you can follow anyone, anywhere. It is open source, contains no ads or tracking, and largely survives on the good will of the thousands of people who run the sites.

Honestly, it's not all great. Some of it isn't even good. Like those early Internet days, it is messy and confusing to figure out what you should join and where everyone else is. There are only about 12 million users, but lots of them are weird and funny, and many are my friends. It is chaotic and argumentative, but it feels like a kind of family.

If you're intrigued by the Fediverse, then check out this series of essays by Elena Rossini, which includes a great 4-minute video that you can share with friends.

Everything old is new again

Anyways, all of this reminded me of the days when I used to run this sort of stuff.

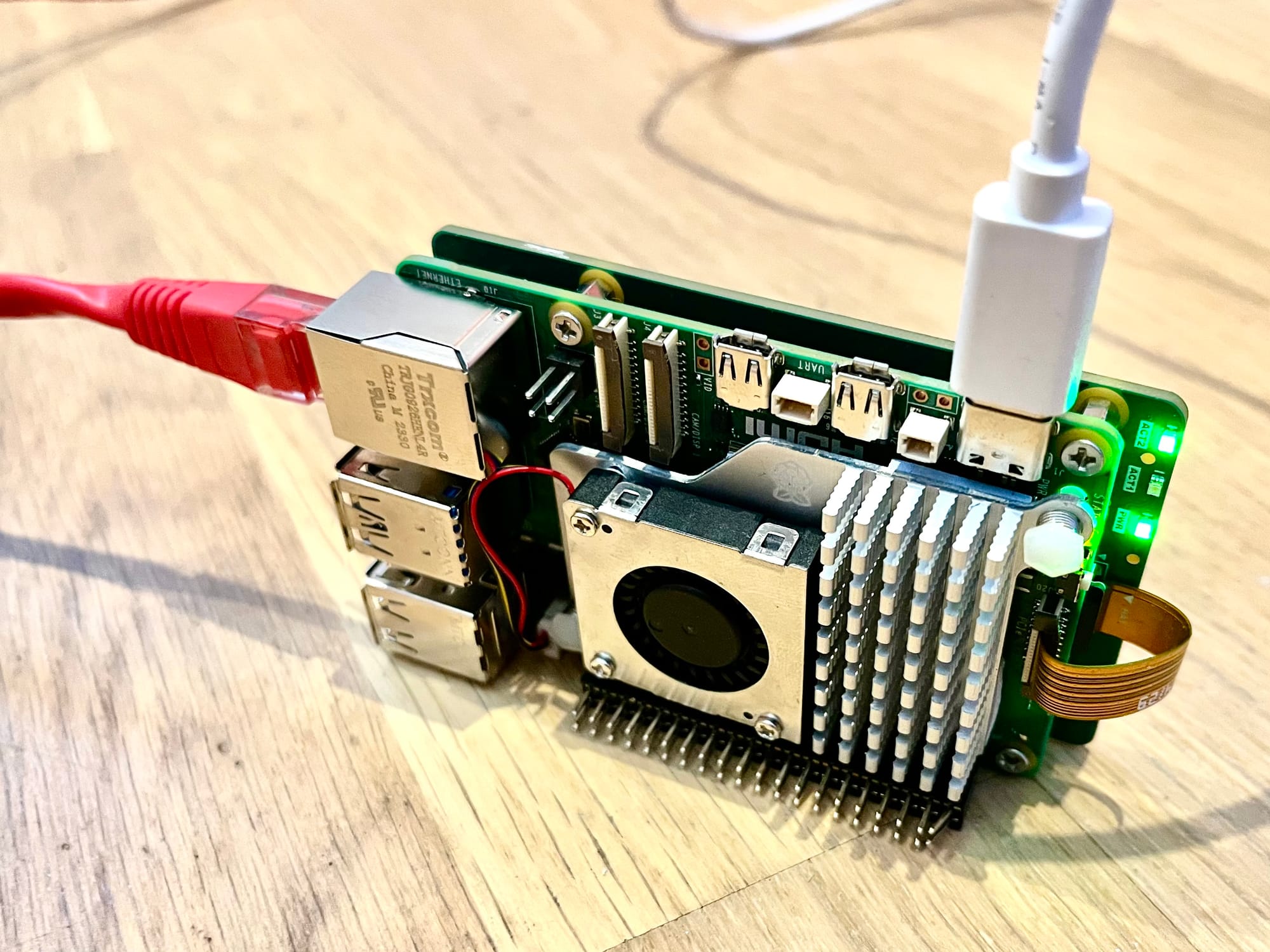

I worked it out: Output Arts has been spending almost £1000 a year on Internet services and subscriptions that I could run myself. I pay for high-speed fibre at home anyway and the hardware that now runs everything cost about £250 and draws a measly 5W. Instead of enriching a few billionaires further, we can support a bunch of projects that make the world a better place and save money.
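As a rough sanity check on those numbers, here is the arithmetic as a small Python sketch. The £1000, £250 and 5W figures come from the paragraph above; the electricity price is purely an assumed placeholder, so swap in your own tariff.

```python
HOURS_PER_YEAR = 24 * 365

annual_subscriptions_gbp = 1000.0  # "almost £1000 a year", from the text
hardware_cost_gbp = 250.0          # "about £250", from the text
power_draw_watts = 5.0             # from the text

# ASSUMPTION: illustrative electricity price, not a figure from the article.
assumed_price_gbp_per_kwh = 0.30


def payback_months(hardware_cost: float, annual_saving: float) -> float:
    """Months of saved subscriptions needed to cover the hardware cost."""
    return 12 * hardware_cost / annual_saving


def annual_energy_kwh(watts: float) -> float:
    """Energy used in a year by a device drawing a constant load."""
    return watts * HOURS_PER_YEAR / 1000


months = payback_months(hardware_cost_gbp, annual_subscriptions_gbp)
energy_kwh = annual_energy_kwh(power_draw_watts)
running_cost = energy_kwh * assumed_price_gbp_per_kwh

print(f"Hardware pays for itself in about {months:.0f} months")
print(f"Energy used: {energy_kwh:.1f} kWh a year")
print(f"Electricity at the assumed price: £{running_cost:.2f} a year")
```

This ignores the fibre connection (which is paid for anyway) and the time spent running things, so treat it as a back-of-the-envelope figure.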

I recognise that I have specific skills that let me do this, but most of them I learned when I was a teenager – chances are that there's a teenager in your life who could do the same. Fibre at home, ever cheaper computer hardware and a wealth of free, open source software make this stuff easier than it has ever been. Even if you don't fancy having to set up hardware at your house, there are lots of companies that aren't Amazon who will run it for you – like the excellent Mythic Beasts.

Self-hosting is undoubtedly more work, but it's also rewarding and punk, and in some small way makes for a more diverse and interesting world.

I feel like that's what we, as artists, should stand for.

Technical details for the geeks in the audience

The hardware:

- Raspberry Pi 5 16GB

- Raspberry Pi Active Cooler

- 52Pi PD Power Extension Board

- PineBoards HatDrive! Dual M.2 NVMe HAT (company now closed down, but some stock is out in the wild and alternatives exist)

- 2 x Raspberry Pi SSD 512GB

- 32GB Class 10 A1 microSD card

The host software:

- Ubuntu Server 25.04 – on the microSD card

- OpenZFS – mirror on the SSDs for data and some volatile/config directories*

- Docker – container management

- nginx – reverse proxying and static site serving

- certbot – SSL certificate management

* If you put a bunch of things like /var/log, /var/lib/{docker,nginx} and /etc/{letsencrypt,nginx} in the ZFS pool, then a microSD card root will last for years, and upgrading the OS becomes relatively easy and low-downtime: just swap in a new, pre-built card and import the pool.
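One way to picture that footnote is as a dataset per directory, each with its mountpoint set to the original path. The Python sketch below only prints the `zfs create` commands for review; it does not run anything. The pool name `tank` and the dataset naming scheme are assumptions invented for this example, not the actual layout used here.

```python
import shlex

# ASSUMPTIONS: the pool name and dataset naming scheme are invented for this
# sketch; the directory list is the one given in the footnote above.
POOL = "tank"
DIRECTORIES = [
    "/var/log",
    "/var/lib/docker",
    "/var/lib/nginx",
    "/etc/letsencrypt",
    "/etc/nginx",
]


def dataset_name(pool: str, directory: str) -> str:
    """Map '/var/lib/docker' to '<pool>/var-lib-docker'."""
    return f"{pool}/{directory.strip('/').replace('/', '-')}"


def create_command(pool: str, directory: str) -> str:
    """Return a 'zfs create' command that mounts a dataset at directory."""
    args = [
        "zfs", "create",
        "-o", f"mountpoint={directory}",
        dataset_name(pool, directory),
    ]
    return shlex.join(args)


# Dry run: print the commands for review instead of executing them.
for directory in DIRECTORIES:
    print(create_command(POOL, directory))
```

On a system that is already running, the existing contents of each directory would need copying into the new dataset before it takes over the path, which is a good reason to do this on a freshly built card.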

The apps:

- Ghost – www.outputarts.com

- PeerTube – video.outputarts.com

- Formbricks – online surveys

- Forgejo – source code management

- Nextcloud – file storage and collaboration tools

- Grafana/Prometheus – statistics and monitoring

- plus a few custom project sites and apps (a mix of static HTML and containerised Python web apps)

All of the apps are Docker Compose projects with all config and data stored in the project directory as bind mounts. Each of these project directories is a ZFS dataset (sub-volume) under /srv, which allows all of the usual nice things that ZFS gives you, like independent storage limits, snapshots, compression and block size settings.
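To make the per-project layout a bit more concrete, here is a hedged Python sketch that prints the `zfs` commands for creating a project's storage with its own properties and for snapshotting it, say before an upgrade. It assumes a parent dataset called `tank/srv` mounted at /srv, so that children inherit /srv/<name> as their mountpoint; that name, the project names and every property value are illustrative guesses rather than the real settings. `quota`, `compression` and `recordsize` are standard ZFS properties.

```python
import shlex
from dataclasses import dataclass, field


@dataclass
class Project:
    name: str
    properties: dict[str, str] = field(default_factory=dict)


# ASSUMPTIONS: parent dataset name, project names and property values are all
# hypothetical and chosen only to illustrate per-project settings.
PARENT = "tank/srv"
PROJECTS = [
    Project("ghost", {"quota": "10G", "compression": "lz4"}),
    Project("peertube", {"quota": "200G", "recordsize": "1M"}),
]


def create_command(parent: str, project: Project) -> str:
    """Return a 'zfs create' command with the project's own properties."""
    args = ["zfs", "create"]
    for key, value in sorted(project.properties.items()):
        args += ["-o", f"{key}={value}"]
    args.append(f"{parent}/{project.name}")
    return shlex.join(args)


def snapshot_command(parent: str, project: Project, label: str) -> str:
    """Return a 'zfs snapshot' command for one project's storage."""
    return shlex.join(["zfs", "snapshot", f"{parent}/{project.name}@{label}"])


# Dry run: print the commands for review instead of executing them.
for project in PROJECTS:
    print(create_command(PARENT, project))
    print(snapshot_command(PARENT, project, "pre-upgrade"))
```

Because config and data both live in the project directory, a snapshot like this captures everything an app needs in one go.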